The Generative AI Dawn: The Promises, Opportunities, Challenges, and A Way Forward

Exploring the Potential Impact of Large Language Models on the Future of Medicine

This blog is inspired by topics covered in Episode 11: Exploring AI, ChatGPT and Clinical Informatics with Professor Nigam Shah.

If you haven’t listened to it yet, check it out on Apple, Spotify, Substack or wherever you get your podcasts.

Introduction.

The field of Artificial Intelligence (AI) has experienced booms and busts over the past six decades. The busts, often referred to as ‘AI winters’, marked downturns in attention, funding and research, typically prompted when research areas hit a dead end or results failed to live up to expectations.

The 2000s saw an upturn in AI progress, followed by a further boom with the release of OpenAI’s ChatGPT in late 2022. This new chatbot represented a paradigm shift in AI development with its remarkable ability to understand and generate complex and fluent human-like text. Additionally, this model (and others) has shown curious emergent capabilities that expand the possibilities of this technology. As a result, ChatGPT became the fastest app at the time to reach 100 million users1.

Since then there has been a gold rush of technology companies and startups releasing their own AI-powered chatbots, including Google (Gemini), Microsoft (Copilot), Meta (Llama) and Anthropic (Claude). Many of these companies have doubled down on AI, integrating these tools into their core products and services. Models are now being rapidly updated and refined, with some becoming multimodal and offering impressive text-to-image and text-to-video generation.

See OpenAI’s text-to-video model below.

Decoding AI.

AI is a multidisciplinary field with deep roots in statistics and computer science, but it also crosses over into philosophy, neuroscience and psychology (see the great book “God, Human, Animal, Machine”).

To land closer to a definition, it’s best to first recognise that different audiences apply different assumptions and levels of abstraction to the topic. That is, some may assume AI is replicating human thinking, while others assume it is just a rules-based system.

These extremes can send our intuitions in different directions, as the first implies AI has human-like thinking, which can then be entangled with our conceptions of human consciousness, reasoning and ethics. The second definition is so simple that it includes something not typically described as AI, such as the humble calculator.

Additionally, there are many different types of AI models, each with a different degree of human-likeness and different use cases, strengths and weaknesses. This means pinpointing exactly what someone means by ‘AI’ is important for understanding its utility and limitations, and it often takes time to define the term clearly enough for it to be meaningful. The point here is that we must all consider these factors and assumptions when talking about AI.

Given the challenges with definitions, understanding some of the conceptual foundations of AI may shed more light on how best to understand it.

AI Concepts.

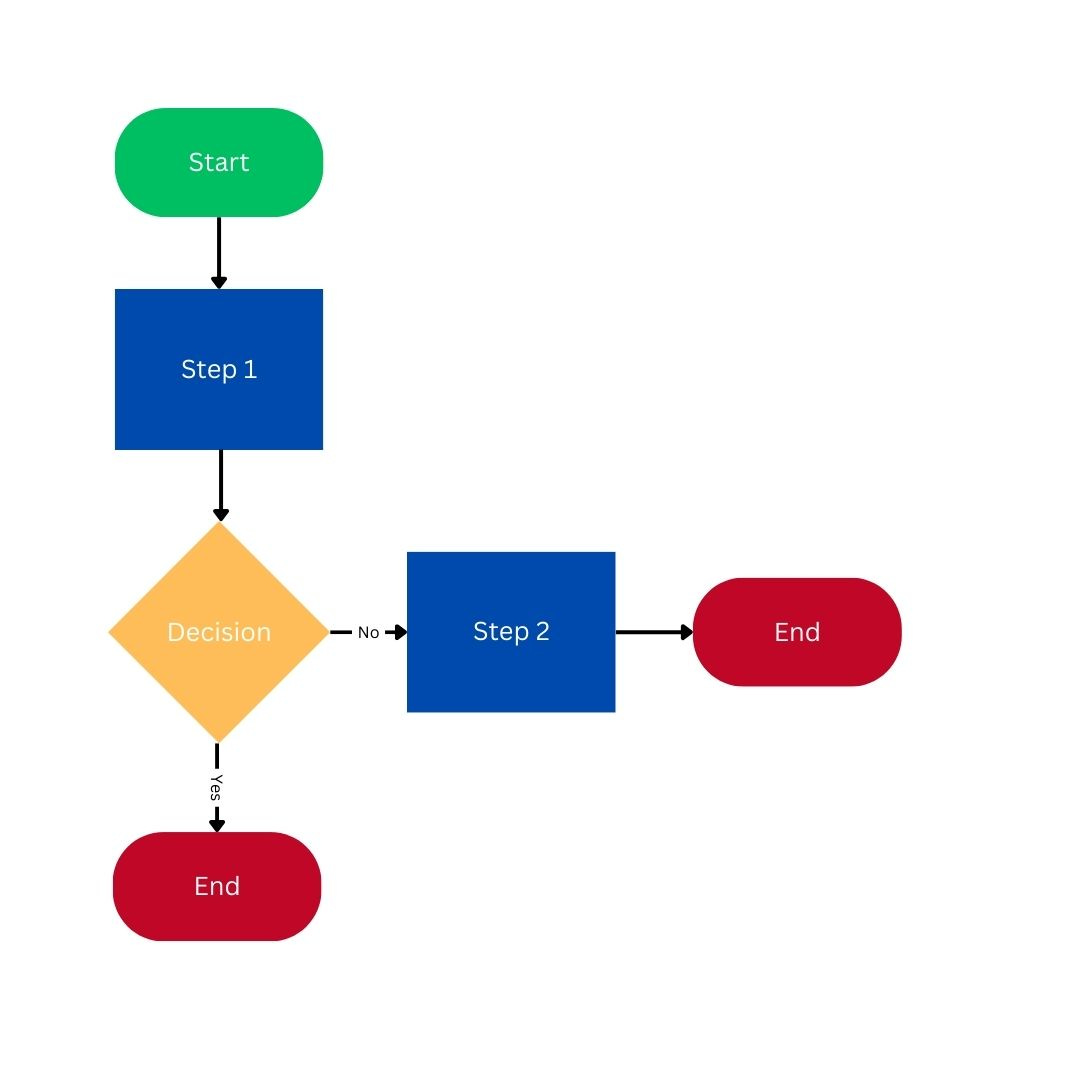

The term algorithm can be traced back to the Persian polymath Al-Khwārizmī (writing around 825 CE), who wrote of the power of process-based problem-solving in mathematics. These step-by-step instructions, much like a recipe, are the backbone of AI.

More complex algorithms use statistical methods and specific feedback mechanisms to enhance the algorithm’s accuracy. This results in improvements in the model without explicit programming. In essence the algorithm learns, hence this field is called Machine Learning.
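To make the idea of a feedback mechanism concrete, here is a minimal sketch in Python. It is a toy illustration rather than how any real product is built: the example data, the learning rate and the function name `train_slope` are all assumptions made up for this post. The program is never told the rule linking the inputs to the outputs; it only sees how wrong each guess was and adjusts itself accordingly.

```python
# Toy example: learn the slope w in y = w * x from examples, using error feedback.
# The data, learning rate and number of passes are illustrative assumptions.

def train_slope(examples, learning_rate=0.01, passes=200):
    w = 0.0  # start with no knowledge of the relationship
    for _ in range(passes):
        for x, y in examples:
            prediction = w * x
            error = prediction - y          # feedback: how wrong was the guess?
            w -= learning_rate * error * x  # nudge w to shrink that error
    return w

examples = [(1, 2), (2, 4), (3, 6), (4, 8)]  # hidden rule: y = 2 * x
learned_w = train_slope(examples)
print(round(learned_w, 3))  # prints 2.0
```

Nobody wrote "multiply by two" into the code. The value was recovered from the examples alone, which is the sense in which the algorithm learns.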

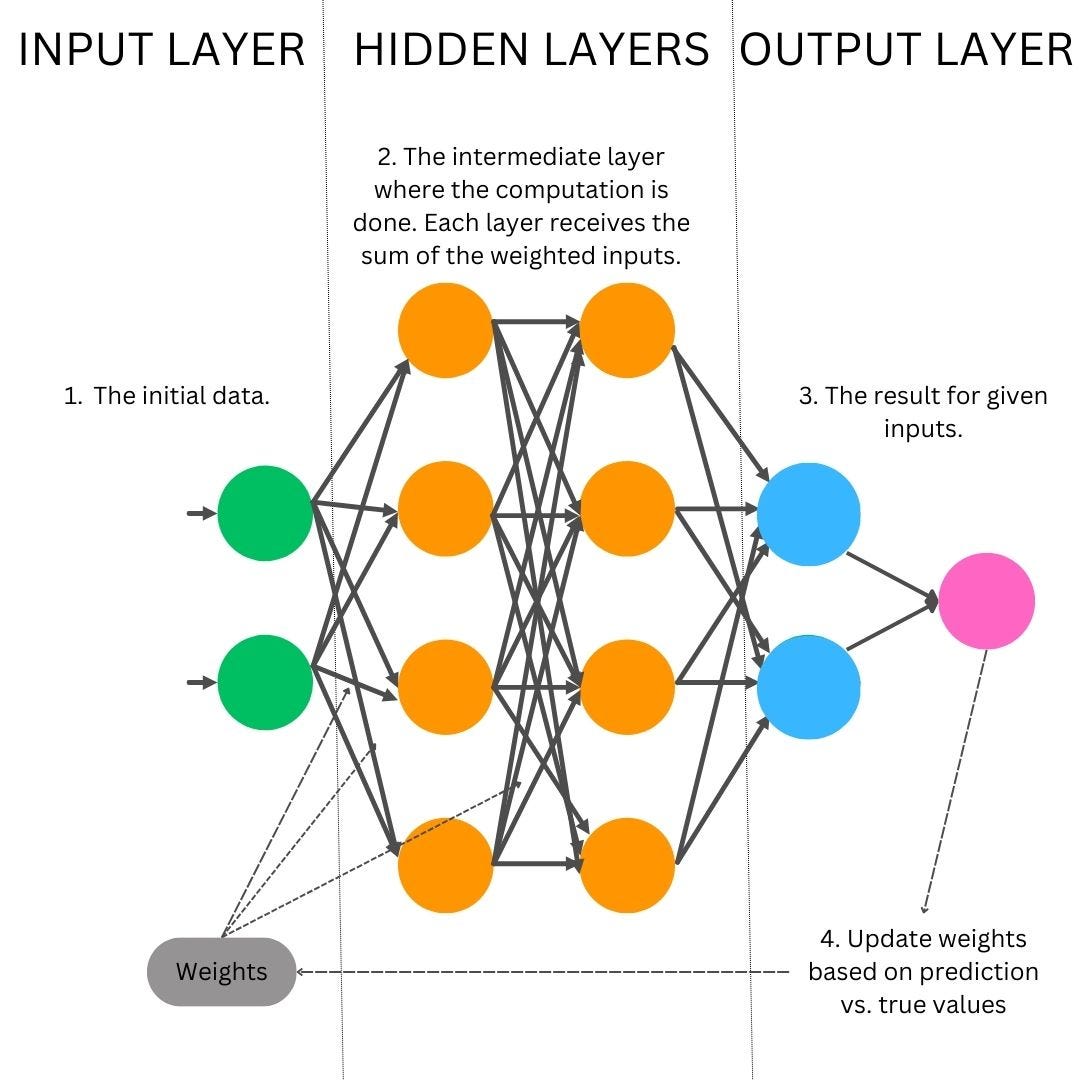

Neural Networks, a type of Machine Learning, are algorithms loosely inspired by the brain's architecture, with ‘weights’ in each layer that adjust the input. Deep learning uses neural networks with many stacked layers, and it is the basis of Generative AI, which produces new content.
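As a rough sketch of what ‘weights in each layer that adjust the input’ means, the Python below passes two numbers through a tiny two-layer network. The weights here are hand-picked assumptions purely for illustration; in a real network they would be learned from data, and there would be far more of them.

```python
# Toy two-layer neural network (forward pass only).
# All weights and biases below are made-up values, not learned ones.

def relu(values):
    # A common activation: negative values become zero.
    return [max(0.0, v) for v in values]

def layer(inputs, weights, biases):
    # Each output is a weighted sum of all the inputs, plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = layer(activations, weights, biases)
        if i < len(layers) - 1:  # apply the activation between layers
            activations = relu(activations)
    return activations

layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # layer 1: 2 inputs -> 2 hidden units
    ([[1.0, 2.0]], [0.5]),                    # layer 2: 2 hidden units -> 1 output
]
output = forward([2.0, 1.0], layers)
print(output)  # prints [4.5]
```

Stacking more of these layers is what puts the ‘deep’ in deep learning.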

Large Pre-trained Transformer Language Models, or simply Large Language Models (LLMs), are a type of generative AI. ChatGPT is the web application that runs on the LLMs ‘GPT-3.5’ or ‘GPT-4’ by OpenAI.

G.P.T. stands for Generative Pre-trained Transformer. These models generate new text by predicting the next word in a sentence, having been pretrained on immense amounts of data using a transformer architecture2.

Training uses a semi-supervised approach: pretraining on billions (or trillions) of words of unlabelled text, followed by refinement with a smaller amount of human-labelled data. This human contribution is expensive and time-consuming, which adds to the high investment in this technology and is part of why transformers were such a breakthrough.
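The idea of generating text by predicting the next word can be sketched with a much simpler stand-in. The Python below is not how GPT works internally: it simply counts which word most often follows each word in a tiny made-up corpus, whereas a real LLM uses a transformer trained on vastly more text. The corpus and function names are assumptions for illustration only.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    # "Pretraining": count which word follows which in the text.
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(following, word):
    # Return the most frequently seen next word, or None if the word is unknown.
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(following, start, length=5):
    # Generate text one word at a time, feeding each prediction back in.
    words = [start]
    for _ in range(length):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

corpus = "the patient has a fever . the patient has a cough . the doctor sees the patient ."
model = train_bigrams(corpus)
print(predict_next(model, "patient"))   # prints has
print(generate(model, "the", length=3)) # prints the patient has a
```

Even this crude version shows the loop at the heart of generation: predict a word, append it, then predict again from the new text.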

Transformers are a learning architecture that uses deep learning (stacked neural networks) and several key processes: Embedding, Positional Encoding, Self-Attention and Feedforward layers.

Because these steps handle every word in a sequence at once rather than one word at a time, the process can be run in parallel, greatly reducing the time and cost of training.
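For readers who like to see the moving parts, the embedding, positional encoding and self-attention steps can be sketched in a few lines of Python. This is a heavily simplified sketch under stated assumptions: the three-word vocabulary and its embedding values are invented, the queries, keys and values are just the input vectors themselves (real transformers use learned projection matrices and multiple attention heads), and the feedforward step is left out.

```python
import math

def softmax(scores):
    # Turn raw scores into weights that are positive and sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def positional_encoding(position, dim):
    # Sinusoidal encoding: tells the model where in the sentence a word sits.
    return [
        math.sin(position / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(position / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]

def self_attention(vectors):
    # Simplification: queries, keys and values are the input vectors themselves.
    dim = len(vectors[0])
    outputs = []
    for query in vectors:  # each word's calculation is independent of the others
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)  # how much this word attends to every word
        mixed = [sum(w * value[d] for w, value in zip(weights, vectors))
                 for d in range(dim)]
        outputs.append(mixed)
    return outputs

# Made-up 4-dimensional embeddings for a three-word "sentence".
embeddings = {
    "chest": [1.0, 0.0, 0.0, 0.0],
    "pain":  [0.0, 1.0, 0.0, 0.0],
    "today": [0.0, 0.0, 1.0, 0.0],
}
sentence = ["chest", "pain", "today"]
inputs = [
    [e + p for e, p in zip(embeddings[word], positional_encoding(pos, 4))]
    for pos, word in enumerate(sentence)
]
outputs = self_attention(inputs)
for word, vector in zip(sentence, outputs):
    print(word, [round(v, 2) for v in vector])
```

Because the loop over each word does not depend on the results for any other word, every word can be processed at the same time on suitable hardware.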

For more detailed descriptions of transformers, and to learn about ‘attention’ - a key feature of transformers, I’d recommend reading:

AI potential in Healthcare.

AI offers the potential to revolutionise healthcare across multiple avenues, including streamlining processes, improving decision making and enhancing person-centred care.

Many AI-based systems have already made inroads into healthcare and are used daily. These include clinical decision support tools as well as AI-powered radiology software. However, many have failed to live up to their revolutionary expectations3, including the high-profile bankruptcy of a 4 billion dollar global AI healthcare company4.

The recent batch of LLMs has set out to change this and has already made waves in healthcare, owing to the models’ ability to perform well in medical exams5 and to be rated as more empathetic than doctors when responding to written questions from patients6. However, the assumption that this translates to real-world settings will need further evaluation.

One aspiration for AI would be novel human-AI interfaces. LLM-based virtual assistants could manage appointments, order prescriptions, and summarise important information from patient records in the appropriate language and at the appropriate level of understanding. For clinicians, virtual clinical assistants could reduce repetitive tasks and cognitive load, while helping clinicians navigate the ocean of clinical data, only surfacing relevant and critical information.

There are also some humbler yet still incredibly valuable opportunities that would impact the health and well-being of communities, such as simplifying access to government benefits, insurance, and services. This could also facilitate interagency coordination across various private and public health, social and well-being services. In addition, LLMs could help connect the tsunami of clinical and health data to create meaningful and actionable insights for providers, analysts, policymakers and patients.

While the possibilities for LLMs seem endless, the real-world application and evaluation of these models will need high standards to ensure they live up to expectations on lowering cost and improving access, safety, quality, effectiveness, experience and equity.

AI challenges in Healthcare.

Despite the potential benefits, there are significant challenges surrounding AI in healthcare. Gaps in medical knowledge, and historical and social structures, can lead to biases being baked into the data that AI systems use, potentially perpetuating these biases. These biases can be masked by confident-sounding responses from LLMs, missed through poor quality control, or obscured by ‘black-box’ models that are not transparent. Many algorithms already in use across our society automate such biases - see the book “Weapons of Math Destruction”.

One of the hardest tasks in medicine is holding and managing risk. This is something usually done by experienced clinicians who are attempting to appropriately balance and apply a complex assortment of clinical reasoning, patient needs, operational considerations and resource allocation. AI systems may struggle with this task given their lack of grounded ethical and values-based reasoning. This may result in increased utilisation of inappropriate tests and treatments, leading to a rise in low-value care causing harm to patients and a strain on already limited resources.

Current LLMs excel at appearing human-like in their communication. Given our evolutionary predisposition to be particularly sensitive to human-like qualities, we are especially vulnerable to misattributing characteristics typically reserved for human consciousness, such as agency. This anthropomorphization leads us to place a higher level of trust in these systems, and the resulting complacency could create considerable risk. There are well-documented occurrences of LLMs hallucinating, where they produce coherent and grammatically correct outputs that are nonetheless false or nonsensical. If left unchecked, these could lead to medical errors that are catastrophic for patients.

A Way Forward.

As AI continues to advance rapidly, it is essential to approach its integration into healthcare with a balanced perspective. Adopting an attitude of cautious optimism may help ensure these tools are aimed at our grandest problems while being held to appropriate standards.

With this in mind, it is reasonable to continue to ask fundamental questions of any new AI tool.

What is the problem we are trying to solve? (Define the problem and what success looks like before picking the tools)

Does this solve that problem in our practice and how do we know? (Real-world application of AI on your data)

What is and isn’t it for? (Limitations, bias, fairness)

What happens when it doesn’t work and how do we know? (Transparency, explainability, inspectability, quality and safety)

“The purpose remains the same, which is we want to use as much data as we can, to decide whether to act and how to act” - Professor Nigam Shah

Individual clinicians should familiarise themselves with these tools and develop new skills to understand their applications and limitations. These models will still need experts to decide what to ask, provide context, compensate for weaknesses and evaluate the quality of the results.

At the institutional and government level, new digital health strategies and policies are required to provide adequate safety guardrails for this new technology, including best practice guidelines, registers, public review and regulation - see Regulatory Considerations on AI for Health by WHO. Groups like the Coalition for Health AI are useful guides for the responsible use of AI in healthcare.

Implementing these changes will require new roles, relationships and skills, as well as a cultural shift in the application of technology in healthcare, for which some have recommended a bottom-up approach7. Additionally, new AI infrastructure for the management and quality control of real-world AI models will be necessary.

Summary.

“The question is, will the impact of LLMs and similar kinds of AI be as big as smartphones or as big as electricity” - Sean Carroll’s Mindscape podcast

While it is hard to pinpoint the exact magnitude of impact these new tools will have, it is clear that Generative AI is here and presents tantalising opportunities in healthcare. However, while the applications appear limitless, it is not yet clear exactly which AI tools will be useful, effective or safe.

Individuals and institutions need to support the rapid upskilling of clinicians, to ensure that core pillars of medical ethics are applied and that healthcare remains a relational endeavour between humans, albeit augmented by technology. This will involve re-examining our clinical education, training and work environments.

Proactive governments and institutions must create the infrastructure to support responsible and accountable AI tools and services. This should also include stewarding policy and innovation to focus on neglected areas and communities.

AI offers a unique opportunity for us to ask fundamental questions about the nature of the healthcare system, including its structure and delivery. It will create more questions than answers. As a tool, it is formidable but it still depends on how we decide to use it. As clinicians, we have a responsibility to take a leadership role in shaping these decisions. This involves advocating for the co-creation of solutions, and ensuring the voices of our patients and the public are included. By engaging all stakeholders, we can work towards developing tools that truly serve the needs of our community and align with the core values of a collective human-centred healthcare system.

Thank you for reading this blog about AI. If you’ve enjoyed it, show your support by writing a comment below. What did I get right or wrong? How do you think AI will impact healthcare?

Take care,

Jono

1. https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/

2. Vaswani A et al. “Attention Is All You Need”. 2017. https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

3. Huisman M et al. “The emperor has few clothes: a realistic appraisal of current AI in radiology”. European Radiology. 2024. https://link.springer.com/article/10.1007/s00330-024-10664-0

4. https://www.wired.com/story/babylon-health-warning-ai-unicorns/

5. Hadhazy A. “ChatGPT Outscores Medical Students on Complex Clinical Care Exam Questions”. 2023. https://hai.stanford.edu/news/chatgpt-out-scores-medical-students-complex-clinical-care-exam-questions

6. Ayers J et al. “Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum”. JAMA Intern Med. 2023. https://jamanetwork.com/journals/jamainternalmedicine/article-abstract/2804309

7. Jindal J et al. “Ensuring Useful Adoption of Generative Artificial Intelligence in Healthcare”. Journal of the American Medical Informatics Association. 2024. https://academic.oup.com/jamia/advance-article/doi/10.1093/jamia/ocae043/7624147?guestAccessKey=f5302758-4730-49ab-b40b-e7eb838e5a62&login=true
